Dear readers, With the launch of e-newsletter CUHK in Focus, CUHKUPDates has retired and this site will no longer be updated. To stay abreast of the University’s latest news, please go to https://focus.cuhk.edu.hk. Thank you.

Perfecting “Deep Learning” for Advanced Image Recognition

Prof. Wang Xiaogang

Department of Electronic Engineering

In the sci-fi movie Minority Report, it took Tom Cruise scouring his way through millions of images to identify crimes before they happened – and prevent them. There was always a human manipulating the data. But thanks to the work of a CUHK professor, computers may be able to sort out what’s happening on their own.

Computer scientist Wang Xiaogang of the Department of Electronic Engineering at CUHK has improved the way that machines sort images to the point where they are able to match the human ability to recognize faces. He is taking that expertise and expanding on it so that computers will be better equipped to detect and identify objects and actions – including what people are doing. The computer can report that data without requiring a human to sift through millions of images.

Professor Wang is using a technique known as Deep Learning to mimic the processes of the mind. Deep Learning, a method of structuring computer networks that has been championed by Professor Geoffrey Hinton of the University of Toronto and Google, uses parallel computing to pass information between millions of computation units to simulate the way the neural networks of the human mind work. Once under way, the interaction between the units helps them to “learn” the increasing complexity of millions of parameters without a human being having to keep programming different instructions.

The most common deep-learning algorithms use convolutional layers, nonlinear layers and pooling layers to form a hierarchy that extracts features from input data. Such multi-layered computing is being used for a wide variety of functions, such as identifying possible cases of breast cancer using microscopic images, enhancing speech recognition, and identifying Chinese characters.

Scientists have been studying ways of replicating the brain’s neural network since the 1940s. There was a wave of interest when computers first went mainstream in the 1980s. But the computational power still wasn't sufficient, and the interest waned. Another problem was the lack of large-scale databases that would allow for training complex neural networks with millions of parameters.

The brain’s visual cortex has six layers of neural interaction that help it recognize objects. We can use the information not only to recognize an object but also to imagine what the rest of it looks like, or how a face might look in different conditions or with different expressions.

In the last decade, there has been a resurgence in mimicking the brain. High-powered GPUs, or graphic-processing units, drive almost infinitely better results. Whereas a traditional computer nowadays runs on one to eight cores, each GPU consists of more than 1,000 cores, enhancing dramatically how much data it can sort and how quickly it can process data.

“To train a neural network used to take one month,” Professor Wang says. “Now it takes 10 hours. It’s like training a baby, ‘This is your mummy, this is your daddy.’ That’s why the GPU is so important.”

“Big data” has also come to the fore, with digital media allowing for databases with millions of images for training neural networks.

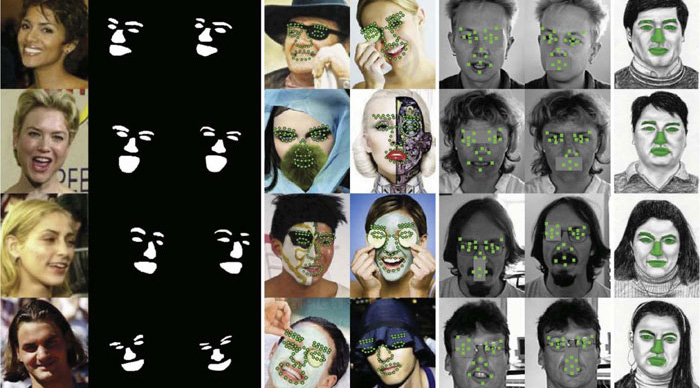

Professor Wang’s group has been the first to apply Deep Learning to the detection of certain parts of the face, as well as the alignment of a face and the segmentation of the body. He has also trained his computer systems to recognize what a person is doing in an image — laughing, eating, talking on a phone — and estimate what kind of pose somebody is in.

After developing a new facial-recognition system, Professor Wang tested it against a data set known as “Labeled Faces in the Wild,” a database of thousands of faces collected from the Internet. He has also tackled the problem of “occlusion” — identifying a person in a crowd, or when only part of him or her shows in an image. Through Deep Learning, his hierarchical computer system can also figure out what parts of a person are in an image, and what position he or she is in.

Humans can recognize the similarity between two cropped faces 97.5 per cent of the time, and the accuracy increases to 99.2 per cent when they’re shown a complete picture. Prior to the introduction of Deep Learning, the most-advanced computers were pitching at 96.3 per cent. But Professor Wang has been able to boost performance to 99.15 per cent – a breakthrough in that computers now have essentially the same success rate as humans.

The improvement comes from increasing the “depth” of Deep Learning by introducing more layers of analysis, as well as getting those multiple layers to share information and re-use components.

Professor Wang has been able to outperform other scientists in facial-recognition tests, including those at Facebook, something he attributes to experience and a willingness to share information.

“Many computer scientists treat Deep Learning as a black box,” Professor Wang says, using their own image database and keeping it in house. “We will open this box and carefully design this internal structure, by incorporating our research into computer vision conducted over the last 10 years.”

In August, Professor Wang’s team came second, behind only Google, out of 38 teams taking on the ImageNet object detection challenge. The test requires identifying objects out of 40,000 images collected from the Internet and placing them within 200 categories.

Professor Wang’s next challenge is to turn the attention of his machines to recognizing what people within crowds of thousands of pedestrians are doing, for instance. It’s far more complex than facial recognition given the large number of people involved and the wide variety of ways they interact. Another challenge is to improve the sophistication of facial recognition so that, for instance, a computer could take a side view of a face and recreate a full-frontal image.

By Alex Frew McMillan

This article was originally published on CUHK Homepage in Nov 2014.