Like Water Like Rays

Wong Tien-tsin's innovation makes a visual difference

When computer scientist Prof. Wong Tien-tsin began working on computer graphics, video-game developers were struggling to churn out dodgy approximations of a fire or a wave. The graphics flickered, but in a bad way, and were built through a time-consuming assembly process using triangular meshes. Each game was based on a physical simulation of the real world.

Now, Professor Wong is recreating still images and video footage that can capture the complexity of what we see in real life. His work takes ultra-high-definition content a step further. With his images, our sense of depth improves, so that the Greek lettering engraved on an ancient temple really appears cut into the stone, as it would in real life. Even the shadows inside each character become visible.

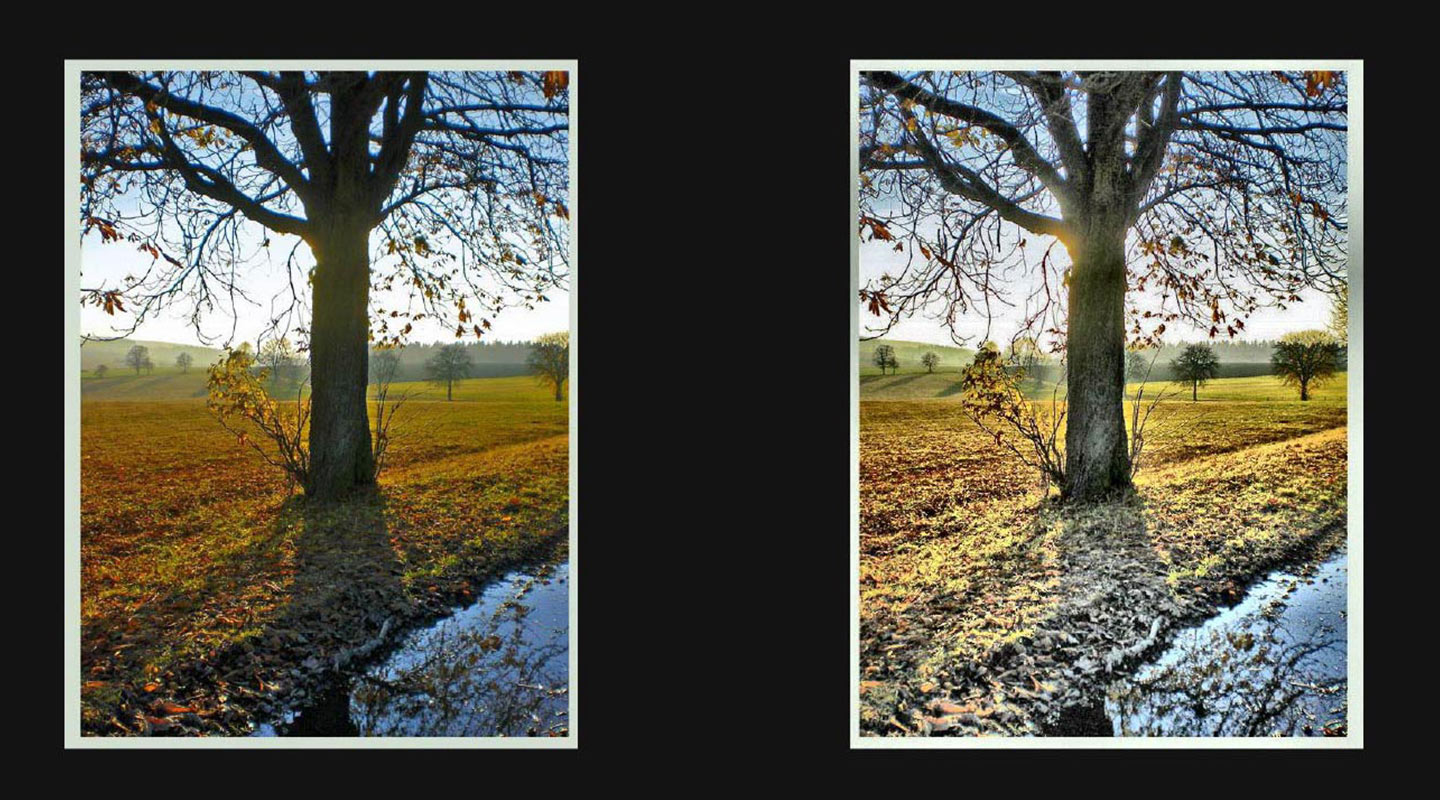

It is with sunlight and water that the technology really comes into its own. In typical imagery, we are never dazzled by what we see on screen, in the same way we can be by light in real life. Professor Wong blends two images that contain slightly different information to enhance the combined result.

So a sunburst through a thick covering of trees and leaves becomes dazzlingly brilliant, with the rays themselves showing depth. By capturing superfine detail and the layers of rays, we see light shining through foliage or clouds that’s just as vibrant as what we see with the naked eye.

Our brains already naturally fuse the two slightly different images seen by our left and our right eye into one seamless image, an unconscious process called binocular single vision. This combination of the slight disparity between what the two eyes see is what enables us to visualize items in three dimensions, with depth.

It is this natural mental skill that Professor Wong uses to his advantage, by making the two renderings slightly different to combine for enhanced effect. Our brains do not only combine slight differences in positioning, but can also fuse differences in contour, intensity and colour. The fusing goes beyond the simple blending of two images to produce a lifelike final result.

Professor Wong plays with how our brain views different renderings of an image, so he can create a variety of effects. At its most basic, he uses one image to contain high contrast in colour, while the other image contains fine detail. What we now normally see on a TV screen, for instance, tends to lose detail when contrast increases, or flatten the image if detail is preserved.

Professor Wong’s combination produces an image that has both high contrast, between colours and between bright light and darkness, and very fine detail, like the writing on a building or the cracks in its walls. The effect is to render the image with something approaching the visual acuity we experience so naturally when looking at our three-dimensional world.
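To make the idea of two complementary renderings concrete, here is a minimal Python sketch. It is an illustration only, not Professor Wong’s algorithm: the function names, the gain and boost values and the one-dimensional row of brightness samples are all assumptions chosen for clarity. One view stretches contrast and clips faint variation; the other compresses the overall range but keeps local detail.

```python
def clamp01(x):
    """Keep a brightness value inside the displayable range 0..1."""
    return min(1.0, max(0.0, x))

def box_blur(row, radius=1):
    """Local mean around each sample; the window is clipped at the edges."""
    out = []
    for i in range(len(row)):
        window = row[max(0, i - radius):min(len(row), i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def contrast_view(row, gain=1.8):
    """Hypothetical 'high contrast' eye: stretch values around mid-grey."""
    return [clamp01(0.5 + gain * (v - 0.5)) for v in row]

def detail_view(row, boost=2.0, radius=1):
    """Hypothetical 'fine detail' eye: flatten the base, amplify local detail."""
    base = box_blur(row, radius)
    return [clamp01(0.5 + 0.5 * (b - 0.5) + boost * (v - b))
            for v, b in zip(row, base)]

# A dark region with faint texture, an edge, then a bright region with faint texture.
row = [0.10, 0.12, 0.10, 0.50, 0.90, 0.88, 0.90]
left = contrast_view(row)   # texture in the dark and bright regions is clipped away
right = detail_view(row)    # texture survives, but overall contrast is lower
```

In this toy example the left view shows a strong dark-to-bright jump but no texture, while the right view keeps the texture at lower contrast. The article’s point is that the brain, shown one view per eye, can fuse both qualities into a single impression.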

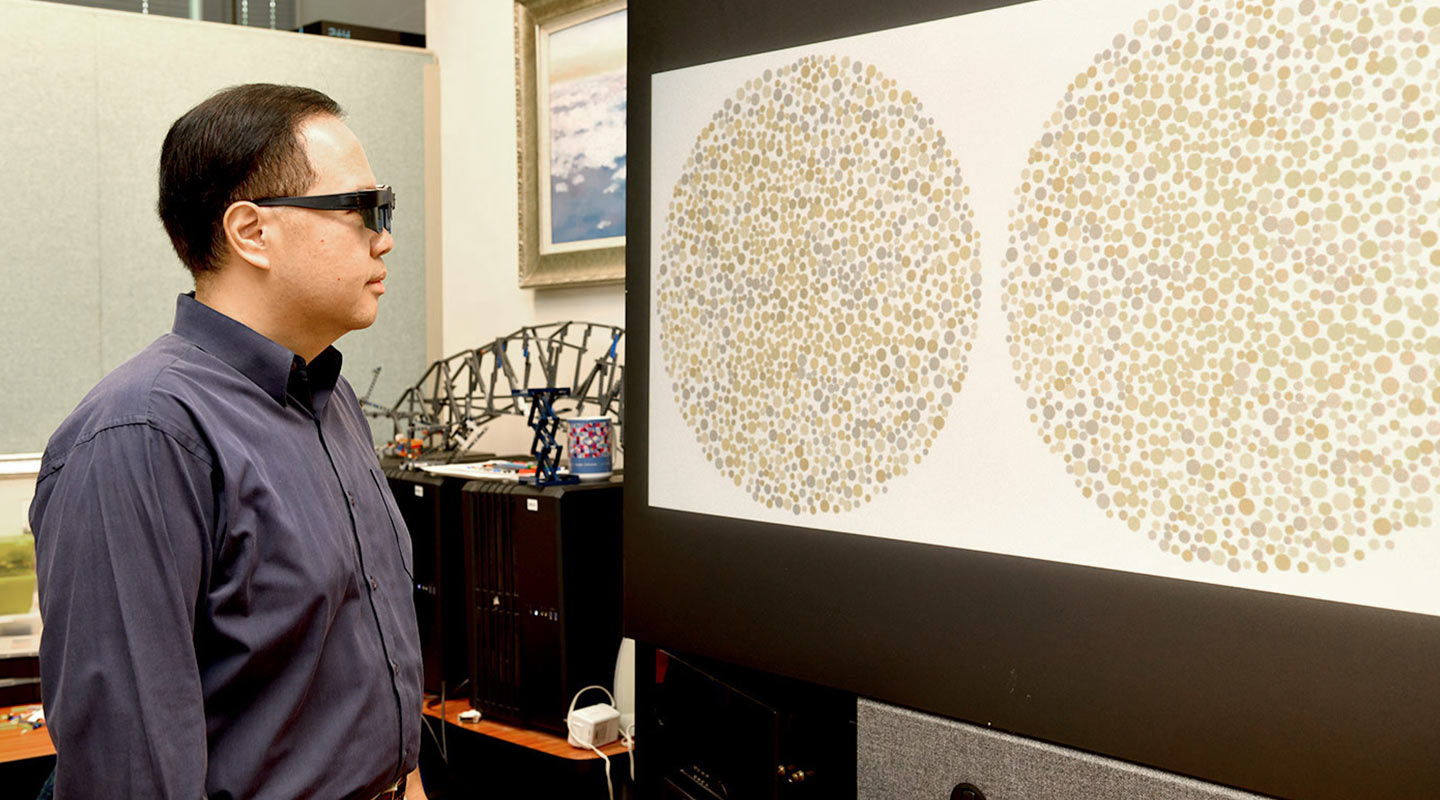

It’s also possible to create different experiences for different kinds of viewers. Professor Wong’s research has produced enhanced video images, for instance, for colourblind people.

Around 250 million people, close to 4% of the global population, have some form of ‘colour-vision deficiency’, or colourblindness. Yet few if any entertainment products such as video games or movies cater to them, even though playing a popular game such as Candy Crush can be impossible for them. Likewise, video hardware does nothing to accommodate them.

Through the use of glasses, Professor Wong and his doctoral protégé Shen Wuyao have developed content that delivers a better experience for colourblind people by binocular mapping. Shen’s doctoral thesis is devoted to the topic.

The content appears perfectly normal to someone with normal vision. But when a colourblind person dons ordinary 3D glasses, they receive modified images to the right and left eye that change and offset the colour. Colourblind people are still normally able to detect the change in the colour of an image, and the two separate images enhance this distinguishability of the colours.

The technology may have medical use. Professor Wong is working with an eye hospital on the prospect of developing dual-image content that could help people who have a 'lazy eye' by encouraging that eye to be more active.

When he began his career, Professor Wong, who holds a post in CUHK’s Department of Computer Science & Engineering, worked only on still images. The challenge in recreating a lifelike image by combining different images is to avoid binocular rivalry, when our eyes compete. That makes the image uncomfortable to look at, as we might be familiar with when we view optical illusions.

It took a step up in computing to improve work on still images and then apply the concept to video footage, which essentially combines many individual images. Through computer algorithms, he applied a Visual Difference Predictor, or VDP, that estimates the optimal range of variation between the left and right images. Then a Binocular Visual Comfort Predictor, or BVCP, anticipates the maximum image disparity we can tolerate, while keeping as wide a range of variance as possible.
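The trade-off described here, making the two views as different as possible without becoming uncomfortable, can be sketched as a constrained search. The Python below is a simplified illustration under stated assumptions, not the actual VDP or BVCP: `is_comfortable` is a hypothetical stand-in that merely caps the average left-right difference, and the `limit` value is arbitrary.

```python
def mean_abs_diff(left, right):
    """Average per-sample difference between the two views."""
    return sum(abs(l - r) for l, r in zip(left, right)) / len(left)

def is_comfortable(left, right, limit=0.15):
    """Hypothetical comfort check: a stand-in, not the real BVCP."""
    return mean_abs_diff(left, right) <= limit

def make_pair(base, delta, strength):
    """Push the two views apart symmetrically around a shared base image."""
    left = [b + strength * d for b, d in zip(base, delta)]
    right = [b - strength * d for b, d in zip(base, delta)]
    return left, right

def widest_comfortable_pair(base, delta, steps=20):
    """Binary-search the largest strength that still passes the comfort check."""
    lo, hi = 0.0, 1.0  # strength 0 gives identical views, which always pass
    for _ in range(steps):
        mid = (lo + hi) / 2
        if is_comfortable(*make_pair(base, delta, mid)):
            lo = mid   # still comfortable: try a larger difference
        else:
            hi = mid   # too different: back off
    return lo, make_pair(base, delta, lo)

base = [0.5, 0.5, 0.5, 0.5]
delta = [0.2, -0.1, 0.3, 0.0]   # assumed per-sample direction of variation
strength, (left, right) = widest_comfortable_pair(base, delta)
```

A real predictor would model human perception rather than a plain average, but the shape of the problem is the same: widen the difference until the comfort limit is reached, then stop.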

To apply this to realistic moving images means the work cannot be done by hand, as it was by early coders of video games. That would be far too time-consuming for video. Instead, the use of artificial intelligence allowed Professor Wong and his team to reformulate the content for moving images, with the computer anticipating the necessary next image in a ‘movie’. Now they can generate real-time ‘temporal-coherent’ videos, meaning we can watch them live while finding the content realistic as well as rich.

This advance suggests that these enhanced images, or colourblind-ready images, can be produced for commercial products in short order. The technology not only improves the experience for viewers, it could also lead to advances in hardware, in terms of televisions, movie screens, projectors and displays. Through a combination of hardware and software, it should be practical to produce an affordable television that could have extra functionality to serve colourblind people, or others with impaired vision, if they choose that setting.

Professor Wong anticipates that the next generation of computer processors will raise the pace from the 20 frames per second achieved with current computing power to 30 frames per second. That would allow the live broadcast of video images with the new, rich visual content he has developed. Better, richer content, in other words, is well on its way.
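As a rough back-of-the-envelope check on those figures, the short Python sketch below converts frame rates into the time available to process each frame. The function name is an assumption for illustration; only the 20 and 30 frames-per-second figures come from the article.

```python
def frame_budget_ms(fps):
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps

current_fps, target_fps = 20, 30
current_budget = frame_budget_ms(current_fps)   # 50 ms per frame
target_budget = frame_budget_ms(target_fps)     # about 33.3 ms per frame
required_speedup = target_fps / current_fps     # 1.5 times faster per frame
```

In other words, moving from 20 to 30 frames per second means each frame must be ready in about two-thirds of the time it takes today.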

By Alex Frew McMillan

This article was originally published on CUHK Homepage in Jul 2019.