
Assessing Extreme Risk: A CUHK statistician produces a better way of measuring unlikely probabilities

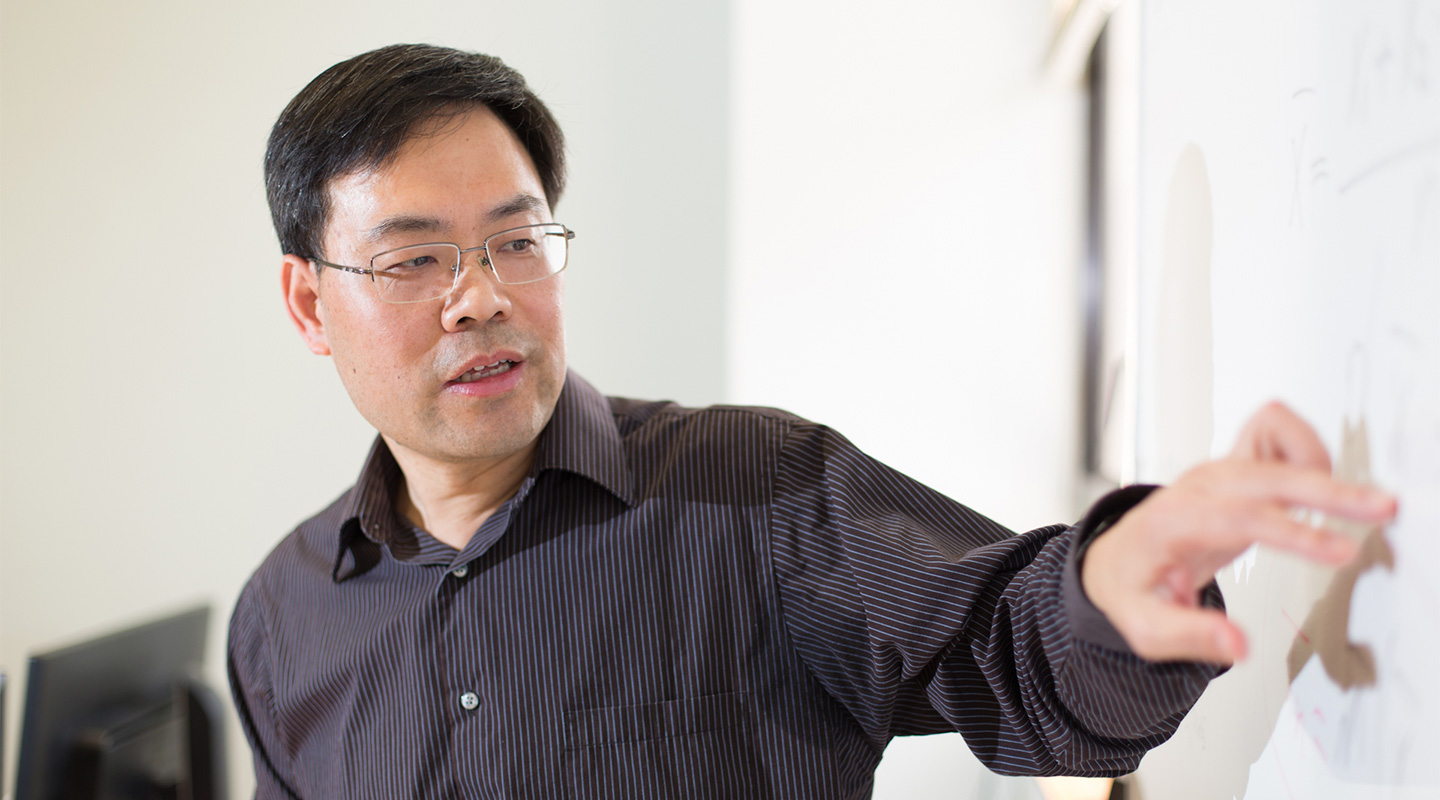

Prof. Shao Qi-man

Department of Statistics

Through his work on probability and statistics, Prof. Shao Qi-man has provided a solid theoretical justification for estimating the probability of exceptionally unlikely events. His techniques apply in areas as diverse as insurance, genetics, biology and political polling.

His work helps assess the probability of extreme risks, where for instance the potential loss for an insurer or a hedge fund is very large but also very unlikely. His research has been used to identify the structural effects of interest, as well as to assess the likely development of tumors, given the exponential expansion of tumor cells.

"I like to work on problems that are important in theory but also have potential applications to other areas," Professor Shao says. "I like to prove results which are elegant with neat assumptions."

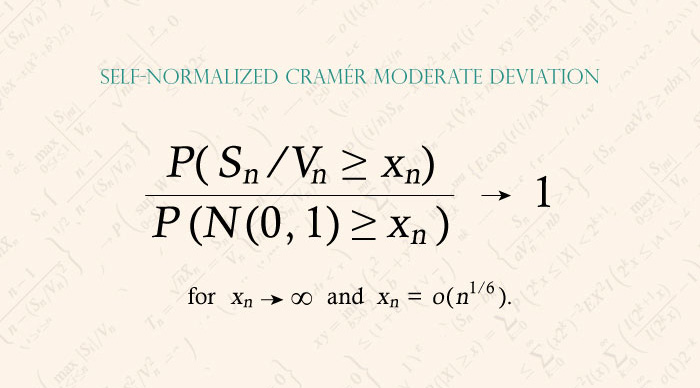

Professor Shao spent two years developing the theory behind his seminal paper, on what are known as self-normalized large deviations. It was the first paper to establish how self-normalized large deviation theory can be used to analyze large amounts of data, reducing the risk of faulty statistical inference when assessing extreme risk.

Professor Shao, the chairman of the Department of Statistics at CUHK, is building on theory first promulgated by the Swedish mathematician Harald Cramér. It was Cramér who established the large-deviation theorem and applied it to the insurance industry. Cramér's work enabled insurance companies to predict with a high degree of accuracy the chance that total revenue from insurance policies would exceed the amount paid out in claims. By applying the strategy, companies could set their insurance-policy prices at a level that, as closely as possible, would ensure the company would turn a profit.

Cramér's large-deviation theorem improves on the central-limit theorem, first proposed in the 18th century. Central-limit theory assumes that, just like rolling two unbiased dice or picking lottery numbers, the probability of a certain outcome will gravitate around a traditional bell curve or normal curve, and uses that curve to estimate the probability.

Central-limit theory assumes that the gap between the normal tail probability and the true probability is small. However, when the probability is extremely small, the gap between the normal tail and the actual distribution can be very large in relative terms: the absolute difference between 0.01 and 0.0001, for instance, is very small, yet 0.01 is 100 times larger than 0.0001.
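To make the point about small probabilities concrete, here is a minimal Python sketch that compares an exact tail probability with its bell-curve estimate. The setting is an assumption chosen purely for illustration and does not come from Professor Shao's papers: the number of claims among 100 policies, each with a 10% chance of a claim. The function names are likewise hypothetical.

```python
import math


def normal_tail(z: float) -> float:
    """Upper-tail probability P(Z > z) for a standard normal Z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def binomial_tail(n: int, p: float, k: int) -> float:
    """Exact P(X >= k) for X ~ Binomial(n, p)."""
    return sum(
        math.comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k, n + 1)
    )


def compare(n: int = 100, p: float = 0.1, ks=(15, 20, 25, 30)):
    """Return (k, exact, normal estimate, exact / estimate) for each threshold k."""
    mean = n * p
    sd = math.sqrt(n * p * (1.0 - p))
    rows = []
    for k in ks:
        exact = binomial_tail(n, p, k)
        # Normal approximation with the usual continuity correction of 0.5.
        approx = normal_tail((k - 0.5 - mean) / sd)
        rows.append((k, exact, approx, exact / approx))
    return rows


if __name__ == "__main__":
    for k, exact, approx, ratio in compare():
        print(f"k={k:2d}  exact={exact:.3e}  normal={approx:.3e}  ratio={ratio:.1f}")
```

Under these assumptions, the two numbers differ by a tiny amount in absolute terms far out in the tail, yet the ratio between them keeps growing as the threshold rises. That growing ratio is the kind of gap large-deviation theory is designed to handle.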

Professor Shao examines what's known as asymptotic theory, where the estimated standard deviation is nonlinear and random, and likely never quite matches the true value. His original work in the 1990s focused on asymptotic theory for dependent random variables, the classical form of asymptotic theory, but he gradually realized that he could apply asymptotic theory to self-normalized variables, which are arbitrary and random.
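For readers who want to see what "self-normalized" means in practice, the sketch below divides a sum of observations by the square root of the sum of their squares, so the data supply their own scale. The Cauchy-distributed inputs, the function names and the parameter values are assumptions made for illustration only; this is not a reproduction of Professor Shao's method.

```python
import math
import random


def self_normalized_sum(xs):
    """Return S_n / V_n, where S_n = sum(x) and V_n = sqrt(sum(x^2))."""
    s = sum(xs)
    v = math.sqrt(sum(x * x for x in xs))
    return s / v


def cauchy_sample(rng, n):
    """Draw n standard Cauchy values: symmetric, with no finite mean or variance."""
    return [math.tan(math.pi * (rng.random() - 0.5)) for _ in range(n)]


def simulate_tail(n=30, threshold=2.5, trials=5000, seed=0):
    """Estimate P(S_n / V_n > threshold) by simulation."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        if self_normalized_sum(cauchy_sample(rng, n)) > threshold:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    x = 2.5
    freq = simulate_tail(threshold=x)
    # For symmetric data, Hoeffding's inequality applied to the random signs
    # gives P(S_n / V_n >= x) <= exp(-x^2 / 2), whatever the tails look like.
    print(f"simulated tail frequency: {freq:.4f}")
    print(f"sub-Gaussian bound:       {math.exp(-x * x / 2):.4f}")
```

Although the raw Cauchy values are wildly heavy-tailed, the self-normalized statistic can never exceed the square root of the sample size in absolute value. For symmetric data its tail also stays under a Gaussian-type bound, which gives a sense of why self-normalization is attractive when extreme observations are present.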

Although he gets satisfaction from a precise and elegant solution to a theoretical problem, Professor Shao also hopes his research has real-world application. His theory has been used, for example, in controlling the false discovery rate when testing whether tamoxifen therapy is effective in identifying genes that lead to certain diseases.

Biologists also applied his research in studying the evolution of tumours, seeking to improve the selection of which drug to use to treat them. The researchers of that topic built on his theory with "work [that] contributes to a mathematical understanding of intratumour heterogeneity, and is also applicable to organisms like bacteria, agricultural pests, and other microbes."

Professor Shao is the author of two books on handling very large data sets: Self-Normalized Processes, the first book on self-normalized large deviation theory parallel to Cramér's work, written with Columbia University professor Victor de la Peña and Stanford University's Tze Leung Lai; and Normal Approximation by Stein's Method, a tome on handling big data written with Louis H.Y. Chen from the National University of Singapore and Larry Goldstein of the University of Southern California.

As the field of "big data" develops, Shao's work will apply to a large number of fields where it is necessary to examine extreme risks or calculate small probabilities. Financial crises, for instance, normally stem from very unlikely events that have outsized impact. "It is important to estimate those extremely rare probabilities," Professor Shao says.

By Alex Frew McMillan

This article was originally published on CUHK Homepage in May 2015.